EARLY in Oppenheimer, the protagonist, who led the US atomic bomb development programme during WWII, attempts half-heartedly to poison one of his supervisors by injecting an apple with poisonous chemicals.

As a science student in an experimental lab, he has easy access to these chemicals. As the film explores, these poisonous materials are not the most deadly things that Oppenheimer works with in his life. The Manhattan Project that Oppenheimer and many thousands of others worked on in strict secrecy culminated in the first atomic weapons: two bombs that killed at least 130,000 people in Hiroshima and Nagasaki.

The rationale behind the secrecy was obvious. They were designing the deadliest bomb anyone had ever seen, and trying to do so before the Nazis. Since then, there have been no further uses of atomic bombs in war, although how to build an atomic weapon is no longer secret.

One declassified report calculated that 100 detonated nuclear warheads would be enough to kill everyone in the world. There are currently around 13,000 nuclear warheads stockpiled.

Although there is a convention banning nuclear weapons, many countries have not signed it. In contrast, the Chemical Weapons Convention prohibits the “development, production, stockpiling and use” of chemical weapons, and has been signed by the large majority of countries, although its value is somewhat questionable.

Agent Orange, for example, used by the US in Vietnam, causes trees to drop their leaves; it also causes many health problems in humans, including leukaemia and birth defects.

But the Convention has a clause allowing the use of chemicals like this, or even of things like napalm, if there are military personnel under the trees (Article 2(4) of Protocol III). Under the US ratification, it can be ignored altogether if the use of chemical weapons, even against civilians, would save (other) civilian lives.

Poisons and toxins are far more varied in their potential design than bombs, and that design is undergoing radical change right now.

One of the biggest developments in 20th-century science was molecular biology, in which complex biological molecules are understood as 3D structures. This is, for example, the work for which Francis Crick, Rosalind Franklin and James Watson were recognised — the discovery that the DNA molecule was structured in the shape of a double helix.

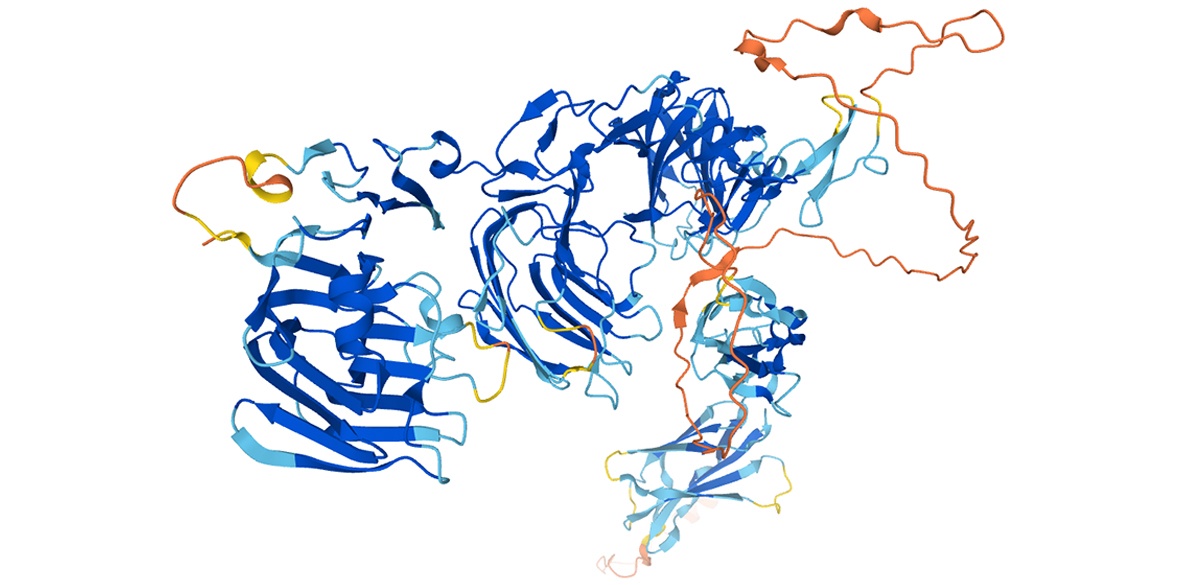

It's the data inside the double helix that can be read out in cells as proteins: long strings of amino acids that fold up to produce some of the largest and most complex and varied molecules we know.

Every unique string of amino acids has a different physical shape, which determines exactly how it works, inside the body and outside of it. Working out these structures involves thinking spatially about how the different parts could fit together stably, something that computers can be built to do. But it’s not as simple as that makes it sound.

Proteins are generally between 50 and 2,000 amino acids long. For a small protein with just 100 amino acids, the time taken for a computer to work out all the possible different configurations of the structure (let alone to decide which is the right one) is longer than the age of the universe.
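To get a feel for the scale of that claim, here is a rough back-of-envelope sketch. Every number in it is an illustrative assumption rather than a figure from the research: it supposes each amino acid can take just three shapes and that a computer can check a trillion configurations every second.

```python
# Back-of-envelope estimate of a brute-force search over protein shapes.
# All three constants are illustrative assumptions, not measured values.
CONFORMATIONS_PER_RESIDUE = 3       # assumed number of shapes each amino acid can adopt
CHECKS_PER_SECOND = 1e12            # assumed speed: a trillion configurations per second
AGE_OF_UNIVERSE_SECONDS = 4.35e17   # roughly 13.8 billion years


def brute_force_seconds(n_residues: int) -> float:
    """Seconds needed to enumerate every configuration of a chain of n_residues."""
    total_configurations = CONFORMATIONS_PER_RESIDUE ** n_residues
    return total_configurations / CHECKS_PER_SECOND


seconds = brute_force_seconds(100)
ratio = seconds / AGE_OF_UNIVERSE_SECONDS
print(f"{seconds:.2e} seconds, about {ratio:.1e} times the age of the universe")
```

Even with these generous assumptions, the search for a 100-amino-acid protein would outlast the universe many times over, and each extra amino acid triples the total.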

Rather than using this unfeasible brute force approach, structural chemists test molecules for other data to give clues to guide and assess the computer simulations. The problem of solving protein structure is one of the great challenges of molecular biology.

The Nobel prizes will be announced next week, but a different prize, the Lasker prize, was given to scientists responsible for a huge leap forward in our understanding of how proteins fold up.

The scientists, however, unlike Franklin, Watson and Crick, didn’t work out the structures of the proteins themselves. They built a computer programme that does it by machine learning. The programme is known as AlphaFold, made by DeepMind, a subsidiary of Alphabet (the parent company of Google).

The company set out to solve the problem of protein folding with machine learning, where the computer uses statistical models fed with vast quantities of data in order to find a solution, without the scientists who use the model necessarily knowing why that solution works.

AlphaFold has done very well in protein prediction tests, and appears to be breaking barriers in understanding at an unprecedented rate.

A lot of people want to know about protein shapes. This is not just in order to understand how our own bodies work, but also to invent new drugs and therapies, so it’s a key target of the pharmaceutical industry.

But AlphaFold has been making its results public. Last week (September 19 2023), another offshoot of the AlphaFold project was published as AlphaMissense.

There, the team looked at every protein that is produced in humans, and all of the individual “mistakes” – substitutions of single amino acids – that can be made.
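As a rough illustration of what "every single substitution" means, the sketch below lists them for a made-up protein. The eight-letter sequence and the function name are hypothetical, chosen only for this example; this is not the team's code.

```python
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # one-letter codes for the 20 standard amino acids


def single_substitutions(sequence: str):
    """Yield (position, original, replacement, mutated sequence) for every single swap."""
    for i, original in enumerate(sequence):
        for replacement in AMINO_ACIDS:
            if replacement != original:
                mutated = sequence[:i] + replacement + sequence[i + 1:]
                yield i + 1, original, replacement, mutated


toy_protein = "MKTAYIAK"  # hypothetical 8-residue sequence, for illustration only
variants = list(single_substitutions(toy_protein))
print(len(variants))  # 8 positions x 19 alternatives = 152
```

Each position can be swapped for any of the 19 other amino acids, so the number of possible "mistakes" grows in step with the length of the protein.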

They classified each by machine learning as likely benign, or likely pathogenic in humans. The article has been criticised because although the authors have shared a lot of their data openly, they haven’t published the whole model, which would allow others to do the same thing with non-human species.

The reason cited is safety. Such a model might allow for the engineering of a harmful bioweapon.

One online commenter on Reddit cited a similar case of a pharmaceutical company that had been designing drugs with low toxicity, but realised that the same software could design new chemical weapons by simply flipping the switch to select for high toxicity.

That’s scary, but as Oppenheimer knew, and the Chemical Weapons Convention testifies, there are plenty of extremely toxic materials out in the world already. In fact, commentators agree that an important factor in withholding the model is simply that the company wants to protect its intellectual property (IP).

Developing atomic weapons takes such huge resources that only nation states can contemplate it. In contrast, designing novel proteins with computers could feasibly be done by small organisations, or even individuals.

Designing proteins this way has the potential to be incredibly powerful. That power has clear consequences for the construction of harmful agents too. Like the atomic bomb makers, the scientists are creating world-shaping materials, and controlling access to this information.

What should our response to important but potentially dangerous information be? It's a really important question. Should this research be stopped? Should it be secret? Whose responsibility do we want it to be to decide who can access powerful or harmful computing? Is the answer to that really Google?