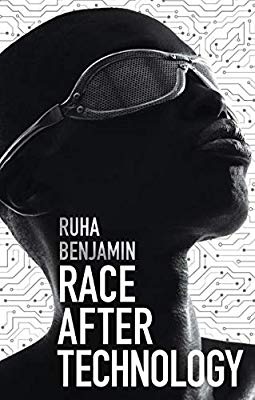

Race After Technology: Abolitionist Tools for the New Jim Code

By Ruha Benjamin

(Polity Press, £14.99)

Race After Technology, by prominent sociologist Ruha Benjamin, explores the increasingly important but little-understood topic of how new technologies are used to deepen racial oppression.

Machine learning algorithms are taking over a wide variety of functions that were previously carried out by humans.

These include filtering CVs to identify the best candidates, determining crime hotspots for increased police deployment, delineating tumours from tissues in preparation for radiotherapy treatment, navigating cars, and much more.

In theory, these algorithms not only reduce costs but provide better, more reliable results, since they’re free from the subjective whims of capricious humans.

For example, a study a few years ago indicated that judges made more lenient sentencing decisions just after lunch.

Algorithms don’t typically have a concept of lunch, and therefore their sentencing decisions don’t vary before or after it.

The problem is that algorithms aren’t objective; since they’ve been trained on real-world data, they tend to systematise and entrench the prejudices that exist in the real world.

Benjamin gives the example of an app that claims to use artificial intelligence methods to give an objective assessment of beauty.

The training data for the beauty algorithm is based, of course, on thousands of subjective human assessments of beauty.

The algorithm searches for visual patterns that correspond to the data’s consensus of what beauty means, and encodes it.

But in a culture that has exalted whiteness for hundreds of years, it’s easy to see how this ends up enforcing racist ideology.

A particularly worrying example is that of predictive policing — machine learning systems that are already being deployed in many cities throughout the world, which tell police patrols where to go in order to prevent crime.

The seed data for these algorithms are arrest records, which obviously reflect decades of racial profiling.

Once encoded in an algorithm, this racial profiling takes on a veneer of objectivity and is placed beyond challenge. Accountability goes out the window.

Far from being a panacea against discrimination, in many situations algorithms discriminate more than their human counterparts, because at least a certain proportion of humans are anti-racist and are conscious of the need to actively avoid bias.

The author’s name for this algorithmic discrimination is “The New Jim Code,” referencing Michelle Alexander’s 2010 book The New Jim Crow about how, in the post-civil rights era, systematic racial discrimination persists in the form of the mass incarceration of African-Americans.

Benjamin describes the New Jim Code as “the employment of new technologies that reflect and reproduce existing inequities but that are promoted and perceived as more objective or progressive than the discriminatory systems of a previous era.”

This New Jim Code, rolled out by private corporations with little or no public accountability, is being used to allow racist ideology to “enter through the back door of tech design.”

Benjamin suggests solutions in the form of abolitionist tools: applications that specifically work against discrimination (for example, an app that crowd-funds bail money for black prisoners); education programmes that help people understand new technologies and how to engage with them safely; strikes and boycotts to prevent systematic discriminatory behaviour by tech companies; and democratic algorithm auditing processes that evaluate computer programs on the extent to which they prioritise profit over social good.

A thread that deserves to be followed up more is that of ownership.

The heart of the problem with AI is the ownership model. The tech platforms we use every day exist for one clear purpose: to make money for shareholders. For private companies, profit will always trump social good.

We need to look at moving to systems of public ownership — or at least public accountability and regulation — that can give us useful services without all the discrimination, dehumanisation and consumerism.

The book suffers a bit from an excess of opaque academic language — sometimes it feels like it’s aimed more at sociology graduates than the wider public.

It’s also unfortunate that the book amplifies some hackneyed tropes borrowed from the US State Department.

For example, Benjamin imagines a hypothetical situation in which “the Zimbabwean government has contracted a China-based company to track millions of Zimbabwean citizens,” via software that will end up being used for “neocolonial extraction for the digital age.”

It’s ironic that, in a book about tackling racist prejudice, the reader is treated to imagined scenarios that vilify two countries — China and Zimbabwe — that refuse to play by the rules of an imperialist world order that is fundamentally racist.

Criticisms notwithstanding, Race After Technology shines a light on an important issue that socialists need to understand and engage with.

Carlos Martinez